Webinar series on ergonomics and periodontal instrumentation by Tatiana Brandt, RDH. Learn about the risk factors for musculoskeletal disorders associated with periodontal instrumentation and how to prevent them.

Webinar recordings from September to October 2020, part 1 of 3.

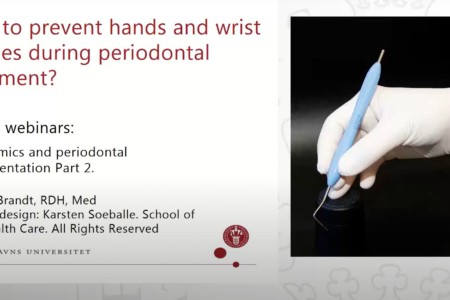

Musculoskeletal Disorders Among Dental Professionals: Hand and Wrist Problems

- Ergonomic risk factors associated with periodontal instrumentation

- Symptoms of work-related injuries to the hands, wrists and fingers, including carpal tunnel syndrome

- The prevalence of musculoskeletal complaints in dental professionals

The views and opinions expressed herein are those of the presenters and do not necessarily reflect the views of LM-Dental. The content is provided solely for informational purposes; it does not constitute medical advice and is not intended to be used as healthcare recommendations.

Speaker

Tatiana Brandt, RDH, MEd (Master's Degree in Health Education and Health Promotion)

- I have practiced clinical dental hygiene for 17 years and have worked for the Department of Odontology and Periodontology at the Copenhagen School of Dentistry.

- Board member of the Danish Society of Periodontology.

- For the last 10 years, I have been a clinical lecturer at the School of Oral Health Care, Faculty of Health and Medical Sciences, University of Copenhagen.

- Speaker and course provider for LM-Dental™ and Plandent.

- My main interests are advanced periodontal instrumentation, ergonomics, and the prevention of musculoskeletal disorders (MSD) in the hands and wrists.